1. INTRODUCTION

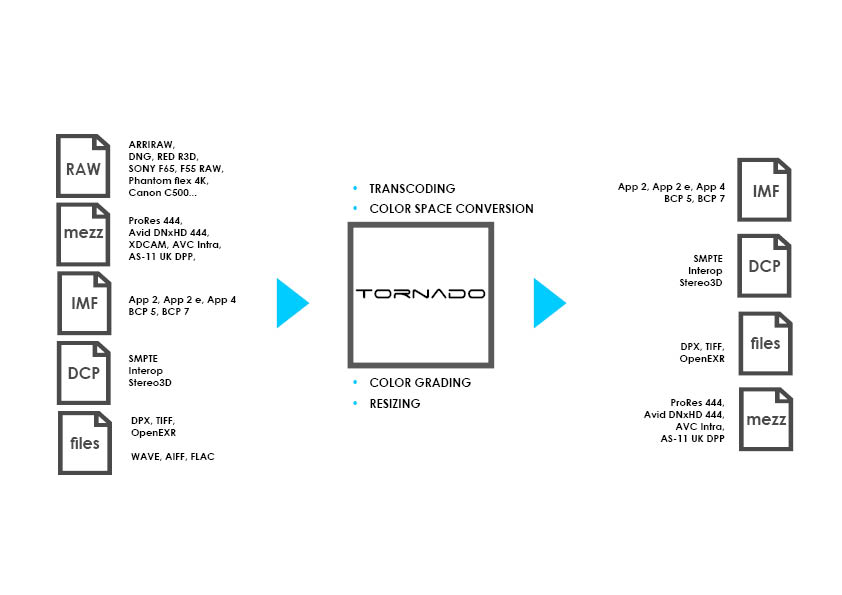

TORNADO is a transcoding engine designed to be integrated in the facilities’ infrastructure: Film labs, post houses, VFX studios or OTT vendors can build their own automated transcoding workflows using TORNADO’s API.

This document describes the possible integrations with TORNADO.

1.1. Legal Information

1.1.1. Copyright Notice

All rights reserved. No part of this document may be reproduced, copied or transmitted in any form by any means electronic, mechanical or otherwise without the permission of Marquise Technologies sàrl. If you are interested in receiving permissions for reproduction or excerpts, please contact us at contact@marquise-tech.com

1.1.2. Trademarks

Marquise Technologies, the logo and TORNADO are trademarks of Marquise Technologies sàrl.

All other trademarks mentioned here within are the property of their respective owners.

1.1.3. Notice of Liability

The information in this document is distributed and provided “as is” without warranty.

While care has been taken during the writing of this document to make the information as accurate as possible, neither the author nor Marquise Technologies sàrl shall be held responsible for losses or damages to any person or entity as a result of using instructions as given in this document.

2. WORKING WITH TORNADO

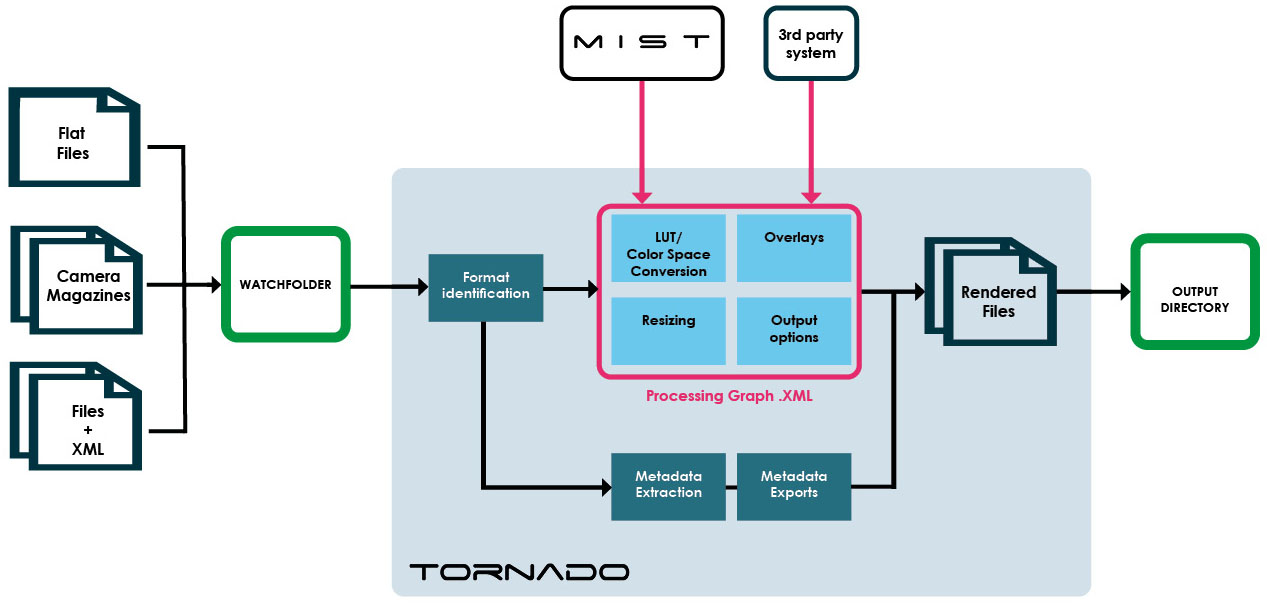

There are different ways of working with TORNADO.

Its aim is to be driven through the REST API by a 3rd party system, but it can also be used in conjunction with Marquise Technologies’ MIST Mastering system.

2.1. Using STORM API

TORNADO uses Marquise Technologies' STORM API and provides 2 ways of communication with the application:

- A Command Line Interface
- A REST Interface

See the chapters below for the STORM API documentation.

2.2. Using MIST

The interaction between MIST and TORNADO can be for two types of operations:

2.2.1. MIST to control TORNADO

TORNADO can be remotely controlled by MIST in order to offload the encoding process from the mastering station.

In this use case, the operator prepares all the rendering and delivery operations using MIST. When ready, the information is sent to TORNADO, which follows the instructions given by MIST.

2.2.2. MIST to create the Processing Graphs for TORNADO

The Processing Graph is an XML file describing all the operations to be performed by TORNADO. This file can either be created by the user using a 3rd party solution, or using MIST.

In this last case, the operator creates the necessary rendering and delivery templates using MIST GUI and saves them.

The resulting XML file is a Processing Graph that can be used later by TORNADO.

3. POSSIBLE OPERATIONS

- Creating a job in TORNADO
- Managing jobs in TORNADO
- Processing Functionalities

3.1. Creating a job in TORNADO

The creation of a job in TORNADO is a step by step process.

| Step | Action |
|---|---|
| Step 1 | Selection of the input folder |
| Step 2 | Selection of the Processing Graph |
| Step 3 | Selection of the output folder |
| Step 4 | Start of the job |

3.2. Managing jobs in TORNADO

TORNADO allows jobs to be managed through the STORM API: functions such as aborting, pausing and deleting jobs are available, together with the list of jobs and their descriptions.

3.3. Processing Functionalities

Users can build their Processing Graphs using the functionalities available in TORNADO.

Some examples of the functions are listed below.

3.3.1. Source identification

- Recognition of source format
- Identification of directory structure (e.g. IMF package, camera magazine)
- Extraction of source metadata
  - Essence metadata
  - Container metadata
  - Format-specific metadata (sidecar XMLs)

3.3.2. LUT application

TORNADO can apply a LookUpTable created by the user.

Supported formats for the LUTs:

- 3DL (e.g. from Autodesk, Scratch)
- .cube (Resolve)
- XML (e.g. from ARRI)
- ACES Common LUT Format

3.3.3. ACES workflow

TORNADO supports ACES color space workflow.

It is possible to select an ACES IDT (Input Device Transform) and an ODT (Output Device Transform).

3.3.4. Resizing

TORNADO provides resizing preset capabilities with resolutions ranging from iPhone to Full Aperture 4K.

Automatic Fitting, Mirroring, Linear and Cubic minification and magnification filters are part of the toolset.

3.3.6. Overlays (Burn-Ins)

TORNADO can apply overlays on the image. The overlays are user-defined XML files (see API documentation).

3.3.7. Output formats

TORNADO supports a variety of output formats (see the Appendix “File Formats Support”).

The desired container, video codec and audio formats are defined in a user-defined XML file.

Additional information like Naming convention, TimeCode information or custom annotations can also be set as presets.

Outputs can be a single file, multiple files, or a chain of files.

3.3.8. Validation

TORNADO provides IMF and DCP Validation. The result of the validation can be exported in XML.

4. INSTALLATION

- Hardware Recommendations
- About Media processing with TORNADO

4.1. Hardware Recommendations

TORNADO’s performance when processing and encoding media depends heavily on the capabilities of the chosen hardware. Please make sure to select a server that matches your needs.

| Item | Recommendation |
|---|---|
| Operating system | Microsoft Windows 64 bit |
| Supported GPU | TORNADO supports the following NVIDIA CUDA GPUs: P4000, P5000, P6000, GTX 1080, GTX 1080ti, RTX 4000, RTX 6000, RTX 2080, RTX 2080ti, RTX 3080, RTX 3080ti, RTX A6000. |
| CPU | A dual CPU configuration is preferred. |

4.2. About Media processing with TORNADO

Depending on the source and output formats chosen, TORNADO will use either the CPU or the GPU to decode/encode the file. The default settings for the encoders and decoders can be configured by editing TORNADO’s tornado.cfg file, located in %programdata%/Marquise Technologies/STORM/session

Below is an example of a configuration file where the JPEG2000 encoder and decoder are set to use CUDA acceleration and the H264 one is set to CPU:

<?xml version="1.0" encoding="UTF-8" ?>

<MTSessionConfig>

<DecoderAccelerationConfigList>

<JPEG2000>

<Architecture>cuda</Architecture>

</JPEG2000>

<H264>

<Architecture>cpu</Architecture>

</H264>

</DecoderAccelerationConfigList>

<EncoderAccelerationConfigList>

<JPEG2000>

<Architecture>cuda</Architecture>

</JPEG2000>

<H264>

<Architecture>cpu</Architecture>

</H264>

</EncoderAccelerationConfigList>

<ProjectsRepository/>

</MTSessionConfig>

The possible values for the Architecture element include cpu and cuda, as used in the example above.

TORNADO’s decoding and encoding speed depends on the performance of the CPU and GPU, as well as on the disk bandwidth.

If a lot of JPEG2000 processing is foreseen, you may use additional GPU(s) for parallel transcoding.

Note: Controlling an additional GPU requires the option MTSW-GPU-C-2 (1 Additional Stream of GPU for parallel transcoding).
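Before editing the configuration file, it can be useful to check which architecture each codec currently uses. The following Python sketch parses a configuration shaped like the example in this section and is only an illustration: the helper name `read_acceleration` is hypothetical, and the sample is embedded in the code rather than read from the actual tornado.cfg file.

```python
import xml.etree.ElementTree as ET

# Copy of the configuration example from this section (whitespace condensed).
SAMPLE_CFG = b"""<?xml version="1.0" encoding="UTF-8" ?>
<MTSessionConfig>
  <DecoderAccelerationConfigList>
    <JPEG2000><Architecture>cuda</Architecture></JPEG2000>
    <H264><Architecture>cpu</Architecture></H264>
  </DecoderAccelerationConfigList>
  <EncoderAccelerationConfigList>
    <JPEG2000><Architecture>cuda</Architecture></JPEG2000>
    <H264><Architecture>cpu</Architecture></H264>
  </EncoderAccelerationConfigList>
  <ProjectsRepository/>
</MTSessionConfig>"""

# Element names taken from the example; the "decoder"/"encoder" keys are ours.
SECTIONS = {
    "decoder": "DecoderAccelerationConfigList",
    "encoder": "EncoderAccelerationConfigList",
}


def read_acceleration(cfg_bytes):
    """Return {"decoder": {codec: architecture}, "encoder": {...}}."""
    root = ET.fromstring(cfg_bytes)
    result = {}
    for role, tag in SECTIONS.items():
        section = root.find(tag)
        codecs = section if section is not None else []
        result[role] = {
            codec.tag: codec.findtext("Architecture") for codec in codecs
        }
    return result


print(read_acceleration(SAMPLE_CFG))
```

To inspect a real installation, the bytes of the configuration file would be passed instead of `SAMPLE_CFG`.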

5. MONITORING GUI

TORNADO offers a web user interface in order to monitor the job processes.

5.1. Access TORNADO GUI

After installing TORNADO, enter one of the following addresses in your web browser:

- If you access the TORNADO GUI from the system it is installed on: http://localhost:8080/gui
- If you access the TORNADO GUI from another computer: http://<ip_or_domainname>:<port_number>/gui

5.2. System

This page allows you to connect to your TORNADO system and see useful information.

| Field | Description |
|---|---|
| Manager URL | Enter the URL of the system TORNADO is installed on and click Connect. |
| Registered Workers | Displays all workers currently installed |
| System Status | Displays the current status of TORNADO. The status can be Connecting, Offline or Running |
| Jobs in Queue | Displays the number of jobs currently in Process, in Error, Paused or Queued |
| Processing GPUs | Displays the type of each GPU recognized by TORNADO |
| Version | Displays the TORNADO software version currently running |
| License | Displays the status of TORNADO’s license: "unknown", "active", "expired", "unlicensed" |

5.3. Queue

This page displays the list of jobs sent during the current session.

- Click on a job to display additional details, including the logs specific to that job.

  Note: Logs are only available when a warning or an error has been raised.

- Export the logs of a job: open the log and click the Export Log button. This will save a .txt file at the location of your choice.

STORM API

Version 2021

Application Programming Interface

1. About STORM API

STORM is the name of the engine powering all the software applications of Marquise Technologies. Its API is described in the following sections:

- Operational Contexts
- Command Line Interface
- REST API
- STORM API References

2. Operational contexts

Each job supported by STORM will require an operational context as the main parameter. The context defines the kind of task that must be performed.

When using the command line interface, the context is the first parameter and it is mandatory. It also determines which parameters and options are accepted after it. Below is an example of how the context is used on the command line:

2.1. Command Line Interface example

stormserver mediainfo -i "E:/SOURCE/testFile.mxf" -format pdf -o "E:/RESULT/testFileInfo.pdf"

The exact syntax for the command line interface is explained in the following sections.

2.2. REST API example

When adding a job, the JSON parameters must contain the context definition, otherwise the job will not be added to the queue. Here is an example of how a job context is identified in the JSON parameters:

{

"context" : "mediainfo",

"input" : "E:/SOURCE/testFile.mxf",

"output" : "E:/RESULT/testFileInfo.pdf",

"format" : "pdf",

...

}

2.3. Operational Contexts

The values for the context are described in the table below:

| Context | Details |
|---|---|
| analayze | Creates a content analysis report |
| enum | Enumerates the various entities supported by the API such as mastering formats, codecs, etc. |
| filecopy | Copies a file from source to destination |
| filemove | Moves a file from source to destination |
| ftpdownload | Downloads a file from an FTP source to a local repository |
| ftpupload | Uploads a file from a local repository to an FTP destination |
| help | Displays the command line interface usage instructions |
| masterinfo | Creates a mastering information report in various file formats |
| mediainfo | Creates a media information report in various file formats |
| validate | Creates a QC report for a master in various file formats |
| xcode | Transcodes a file, a composition or a package into various file formats |
| websrv | Starts the STORM API server as a webservice |
| webwrk | Starts the STORM API server as a webworker |

For each of the above operational contexts, a set of parameters is defined.
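Since a job without a context definition is not added to the queue, a client can check the context before sending anything. The Python sketch below builds the JSON parameters for a job; `build_job` and `KNOWN_CONTEXTS` are hypothetical names, and the set deliberately holds only a subset of the context values from the table above.

```python
import json

# Illustrative subset of the context values listed in the table above.
KNOWN_CONTEXTS = {
    "mediainfo", "masterinfo", "validate", "xcode", "filecopy", "filemove",
}


def build_job(context, **params):
    """Return the JSON text for a job, refusing unknown contexts early."""
    if context not in KNOWN_CONTEXTS:
        raise ValueError(f"unknown context: {context!r}")
    payload = {"context": context}
    payload.update(params)
    return json.dumps(payload, indent=2)


job = build_job(
    "mediainfo",
    input="E:/SOURCE/testFile.mxf",
    output="E:/RESULT/testFileInfo.pdf",
    format="pdf",
)
print(job)
```

Checking on the client side only catches typos early; the server remains the authority on which contexts and parameters it accepts.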

2.3.1. xcode Operational Context parameters

| Parameter Name | Required/Optional | Details |
|---|---|---|
| input | Required | Absolute file name of the source file in one of the remote mounted volumes (e.g. "F:/MEDIA/test.mxf"). |
| output | Required | Absolute file name of the destination file in one of the remote mounted volumes (e.g. "G:/RENDERS/result.mov"). |
| format | Optional | Defines the container format (e.g. "quicktime"). |
| preset | Optional | Absolute file name of the XML preset to be used for the output file. It is mutually exclusive with the format parameter. |
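The rule that format and preset are mutually exclusive is easy to enforce before a job is submitted. The following Python sketch is one possible way to do so; the function name `xcode_params` and the preset path used in the example are hypothetical, while the parameter names come from the table above.

```python
def xcode_params(input_path, output_path, container_format=None, preset=None):
    """Build the parameters of an xcode job from the table above."""
    if container_format is not None and preset is not None:
        raise ValueError("format and preset are mutually exclusive")
    params = {"context": "xcode", "input": input_path, "output": output_path}
    if container_format is not None:
        params["format"] = container_format
    if preset is not None:
        params["preset"] = preset
    return params


# Transcode to a QuickTime container using the format parameter.
by_format = xcode_params(
    "F:/MEDIA/test.mxf", "G:/RENDERS/result.mov", container_format="quicktime"
)

# Same source, driven by an XML preset instead (hypothetical path).
by_preset = xcode_params(
    "F:/MEDIA/test.mxf", "G:/RENDERS/result.mov", preset="G:/PRESETS/delivery.xml"
)
print(by_format, by_preset, sep="\n")
```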

2.3.2. websrv Operational Context parameters

| Parameter Name | Required/Optional | Details |
|---|---|---|
| workers | Optional | Used to specify the number of worker nodes to be launched as local sub-processes by this node. |
| service | Optional | If specified, the node can be installed as a system service. |

2.3.3. webwrk Operational Context parameters

| Parameter Name | Required/Optional | Details |
|---|---|---|
| address | Required | Specifies this node’s URL, e.g. "http://127.0.0.1" |
| port | Required | Specifies this node’s communication port. |
| nodeaddress | Required | Specifies the URL of the manager node with which the worker node should register, e.g. "http://127.0.0.1:8080" |

2.3.4. Enumerable entities

| Option Name | Details |
|---|---|
| masters | Displays a list of available master format names |
| formats | Displays a list of available output file format names |
| specifications | Displays a list of available specifications |
| shims | Displays a list of available shims |
| shimversions | Displays a list of available shim versions |

3. Command Line Interface API

Storm can be used via the command line interface (CLI) just like any other operating system command. This interface is simple and practical for integration into scripts for batch processing and is supported by most of the interpreted languages (e.g. PHP, etc).

The syntax of the command line interface follows a structure based on contexts, actions, objects and options. The general syntax can be described like this:

stormserver [context] [action] [object 1] .. [object n] [option 1] .. [option n]

Example 1:

stormserver enum -masters

The example above enumerates the possible mastering formats.

Example 2:

stormserver mediainfo -i "E:/video.mov" -format "xml" -o "E:/metadata.xml"

The example above extracts the metadata from the file "E:/video.mov" into the file "E:/metadata.xml".

Note: The double quotes around options are only necessary when options contain white spaces or characters that would be forbidden in the direct syntax of a regular command line on the operating system. In most cases they can be omitted; however, it is always safe to use them.
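When the CLI is driven from a script, the quoting question can be avoided by assembling the command as a list of arguments instead of a single string. The Python sketch below shows this for the two examples above; `storm_command` is a hypothetical helper, and it assumes every option is written as a dash followed by its name, as in those examples.

```python
def storm_command(context, *flags, **options):
    """Return an argument list: stormserver <context> -flag ... -name value ..."""
    argv = ["stormserver", context]
    argv.extend(f"-{flag}" for flag in flags)
    for name, value in options.items():
        argv.extend([f"-{name}", str(value)])
    return argv


# Example 1: enumerate the mastering formats.
enum_cmd = storm_command("enum", "masters")

# Example 2: extract metadata into an XML report.
info_cmd = storm_command(
    "mediainfo", i="E:/video.mov", format="xml", o="E:/metadata.xml"
)
print(enum_cmd)
print(info_cmd)
```

Passing such a list to `subprocess.run(info_cmd, check=True)` lets Python hand each argument to the process unchanged, so paths containing white spaces need no manual quoting. This assumes the stormserver executable can be found on the PATH or in the current directory.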

3.1. Using the CLI on Microsoft Windows systems

To use the command line interface on Microsoft Windows systems, follow these steps:

- Press the Windows key and R (for run).
- When the prompt window appears (next to the Windows Start button), type cmd (for command) and press Enter. The console/command line tool should be displayed.
- Go to the installation directory using the cd command (change directory):

E:\>cd "Marquise Technologies\x64"

Note that if the installation drive is different from the current drive, the current drive (volume) must be changed by typing the drive/volume letter followed by the colon character:

Change current drive

C:\Users\marquise>e:

Change current directory

E:\>cd "Marquise Technologies\x64"

The last step is to type the STORM Server command as illustrated above, followed by the operational context and the list of parameters for the context. STORM Server then starts in the specified operational context and continues with the requested processing.

4. REST Interface

The STORM API can be accessed via the REST (REpresentational State Transfer) interface which allows a scalable, service-oriented use. This interface is simple and practical for integration into scripts for batch processing and is supported by most of the interpreted languages (e.g. PHP, etc).

The STORM API REST interface is implemented via the HTTP protocol. Commands are sent as JSON structured requests.

In the following documentation, cURL is used as a tool to demonstrate the HTTP requests to the STORM API server. cURL is a free, multi-platform tool and can be obtained here:

4.1. Starting the server

In order to start STORM Server as a REST web service, you must use the following command:

stormserver websrv

The command above will start the STORM server as a web service listening for HTTP requests on port 8080 by default. This will result in the following:

STORM v2021.1.5.0

(C) 2009-2021 Marquise Technologies

Release Date Jan 5 2021

start threadpool

starting up server... done!

listening on port 8080

waiting requests...

At this point the server will keep running until the /control/shutdown request is received or the command line process is killed.

If you wish to change the communication port, the following command must be used:

stormserver websrv -port 1234

STORM Server can also be installed and started as a system service. In order to do so you must specify the -service option.

The following example uses the Windows sc.exe service manager to install then start STORM Server as a service:

sc create "STORMSERVER" binPath="stormserver websrv -service"

sc start "STORMSERVER"

4.2. How jobs are managed

Storm processes media via a list of queued jobs. These jobs can be created directly via the API, or can be spawned automatically by a watchfolder that is actively monitored by a specific node.

Regardless of the manner in which the job has been created, once the job has been added to the queue of jobs, it can be further manipulated like any other regular job.

4.3. Processing graphs

Processing graphs are complex XML descriptions of various image processing, audio processing and transcoding functions that Storm can apply to the media that needs to be processed.

The processing graph can describe one or more outputs, each output being the result of a list of daisy-chained processing nodes.

The following example shows a processing graph document basics (incomplete):

<?xml version="1.0" encoding="UTF-8" ?>

<mtprocessinggraph>

<nodelist>

<node uuid="12d42cf7-76ea-42ba-bc39-40e684176b08" type="source">

...

</node>

</nodelist>

<linklist>

<link target="13015df7-a165-47a5-9061-50ce646dcfed" input="0" source="12d42cf7-76ea-42ba-bc39-40e684176b08" output="0"/>

...

</linklist>

</mtprocessinggraph>

Each node has a unique id, a type and a list of type-specific parameters.

4.3.1. Node linking

The processing order of the nodes is dictated by a list of links that connect the various nodes involved in the processing of an output. This mechanism allows a node to be used in multiple processing combinations within the same graph.

Each link has a target node id (i.e. the next node in the processing chain), as well as an input number. The number of inputs depends on the target, however most nodes have only one input and therefore the input number is usually 0.

In addition to the target description, each link has a source node id (i.e. the previous node in the processing chain), as well as an output number. The number of outputs depends on the source, however most nodes have only one output and therefore the output number is usually 0.

4.3.2. Processable nodes

Only output nodes are actually processed by Storm. Nodes that are not connected to an output are ignored and do not generate any processing.
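The linking rules can be illustrated by resolving, for each output node, the chain of nodes that feeds it. The Python sketch below walks the links backwards from every node of type "output". It is only an illustration of the rules described above, not STORM's implementation: it assumes each node has a single input, and the short node ids are hypothetical stand-ins for real UUIDs.

```python
import xml.etree.ElementTree as ET

# Small hypothetical graph: one chain ending in an output, plus one
# unconnected node that should be ignored.
GRAPH = b"""<mtprocessinggraph>
  <nodelist>
    <node uuid="src" type="source"/>
    <node uuid="lut1" type="lut"/>
    <node uuid="out1" type="output"/>
    <node uuid="orphan" type="imageResize"/>
  </nodelist>
  <linklist>
    <link target="lut1" input="0" source="src" output="0"/>
    <link target="out1" input="0" source="lut1" output="0"/>
  </linklist>
</mtprocessinggraph>"""


def processing_chains(graph_xml):
    """Return one list of node ids per output node, in processing order."""
    root = ET.fromstring(graph_xml)
    node_types = {n.get("uuid"): n.get("type") for n in root.iter("node")}
    # Map each target node to the node feeding it (single-input assumption).
    upstream = {l.get("target"): l.get("source") for l in root.iter("link")}
    chains = []
    for uuid, node_type in node_types.items():
        if node_type != "output":
            continue
        chain = [uuid]
        # Follow links backwards; stop at the source or if a cycle appears.
        while chain[-1] in upstream and upstream[chain[-1]] not in chain:
            chain.append(upstream[chain[-1]])
        chains.append(list(reversed(chain)))
    return chains


print(processing_chains(GRAPH))
```

The node with id "orphan" never appears in the result because no link connects it to an output.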

4.3.3. Processing Graph example

The following example describes a full processing graph with two processing nodes and one output node. Also, please note the top node of the type "source", which describes the input media.

<?xml version="1.0" encoding="UTF-8" ?>

<mtprocessinggraph>

<nodelist>

<node uuid="12d42cf7-76ea-42ba-bc39-40e684176b08" type="source"/>

<node uuid="13015df7-a165-47a5-9061-50ce646dcfed" type="lut">

<annotation>Untitled Output</annotation>

<filename>E:\Marquise Technologies\x64\luts/HDR 1000 nits to Gamma 2.4.cube</filename>

</node>

<node uuid="97ce96df-08f4-4e9e-8fb9-9e4222c687d2" type="imageResize">

<annotation>Untitled Output</annotation>

<width>1920</width>

<height>1080</height>

<aspectratio>0</aspectratio>

<fit>3</fit>

<flip>false</flip>

<flop>false</flop>

<expandmethod>0</expandmethod>

<compressmethod>0</compressmethod>

</node>

<node uuid="29b7adb8-24b8-4d30-9420-b436eb6b44fa" type="output">

<annotation>Untitled Output</annotation>

<directory>$ProjectName$/$CompositionName$</directory>

<filename>$ClipName$</filename>

<container>mxfop1a</container>

<video>

<channel>0</channel>

<codec>dnxhd</codec>

<quality>hqx</quality>

<bitdepth>10</bitdepth>

<colorprimaries>CIEXYZ</colorprimaries>

<transfercharacteristic>SMPTE.ST2084</transfercharacteristic>

<codingequations>ITU-R.BT.2020</codingequations>

<coderange>full</coderange>

<ST2086Metadata enabled="false">

<MaximumLuminance>0</MaximumLuminance>

<MinimumLuminance>0</MinimumLuminance>

<WhitePointChromaticity>

<x>0.333333</x>

<y>0.333333</y>

</WhitePointChromaticity>

<Primaries>

<ColorPrimaryRed>

<x>0.333333</x>

<y>0.333333</y>

</ColorPrimaryRed>

<ColorPrimaryGreen>

<x>0.333333</x>

<y>0.333333</y>

</ColorPrimaryGreen>

<ColorPrimaryBlue>

<x>0.333333</x>

<y>0.333333</y>

</ColorPrimaryBlue>

</Primaries>

</ST2086Metadata>

</video>

<audio>

<byteorder>little</byteorder>

<soundfieldlist>

<soundfield config="51" downmix="no">

<name>surround mix</name>

<language>ja</language>

<mapping/>

</soundfield>

<soundfield config="ST" downmix="no">

<name>stereo downmix</name>

<language>ja</language>

<mapping/>

</soundfield>

</soundfieldlist>

</audio>

<timecode>

<tcorigin>0</tcorigin>

<tcstart>00:00:00:00</tcstart>

</timecode>

</node>

</nodelist>

<linklist>

<link target="13015df7-a165-47a5-9061-50ce646dcfed" input="0" source="12d42cf7-76ea-42ba-bc39-40e684176b08" output="0"/>

<link target="97ce96df-08f4-4e9e-8fb9-9e4222c687d2" input="0" source="13015df7-a165-47a5-9061-50ce646dcfed" output="0"/>

<link target="29b7adb8-24b8-4d30-9420-b436eb6b44fa" input="0" source="97ce96df-08f4-4e9e-8fb9-9e4222c687d2" output="0"/>

</linklist>

</mtprocessinggraph>

4.4. Working with watchfolders

Storm can handle a list of watchfolders that are actively monitored.

Every new entry (i.e. new file or new directory) into one of the monitored watch folders is checked by Storm against a list of filters attached to a watchfolder.

If the entry matches one of the filters, then a new job is automatically added to the job queue. The job is described by a processing graph associated with the watchfolder.

4.4.1. How watchfolders spawn jobs

As described previously, watchfolders monitor specific file system locations for incoming media. Once a media item has been accepted by one of the filters associated with the watchfolder, a job is automatically spawned.

The job processes the media using the processing graph or preset that was associated with the watchfolder when the watchfolder was created.

Once the job is spawned, it is automatically queued to the list of jobs and therefore can be managed as any regular job.

4.5. Compositions

Compositions are XML timeline descriptions of various audio, video, subtitles and data elements synchronized together. Storm can process complex compositions and apply various processing operators onto them and generate multiple outputs.

4.5.1. Composition example

The following example shows a simple composition document (incomplete):

<?xml version="1.0" encoding="UTF-8"?>

<axf>

<project>

<name>Simple</name>

<format>

<width>3840</width>

<height>2160</height>

<aspectratio>1:1</aspectratio>

<framerate>24:1</framerate>

<cms>

...

</cms>

</format>

<composition>

<name>simple</name>

<type>2</type>

<in>0</in>

<out>750</out>

<format>

<width>1920</width>

<height>1080</height>

<aspectratio>1:1</aspectratio>

<framerate>25:1</framerate>

<cms>

...

</cms>

</format>

<soundfieldlist>

<soundfield>

<audioconfig>LtRt</audioconfig>

<mapping>

...

</mapping>

</soundfield>

</soundfieldlist>

<uid>0c85ed19-fb97-405d-ac6b-8c7057cee84a</uid>

<media>

<video>

<layer>

<name>V1</name>

<v1>

<segment type="clip">

<name>Clearcast 30sec AD FINAL_1080p_full</name>

<uid>46c307d6-e5b5-47b0-ac7a-b7d2f0307861</uid>

<in>0</in>

<out>750</out>

<source>

<refname>/Clearcast 30sec AD FINAL_1080p_full.mov</refname>

<url>F:\Adstream\Clearcast 30sec AD FINAL_1080p_full.mov</url>

<in>0</in>

<out>750</out>

</source>

</segment>

</v1>

</layer>

</video>

<audio>

<layer>

<name>Lt</name>

<routing>

<channel>0</channel>

</routing>

<audiotrack>

<segment type="clip">

<name>Clearcast 30sec AD FINAL_1080p_full</name>

<uid>43564d4b-2ddc-4938-b52e-755867df3bc1</uid>

<in>0</in>

<out>750</out>

<groupid>0</groupid>

<source>

<refname>/Clearcast 30sec AD FINAL_1080p_full.mov</refname>

<url>F:\Adstream\Clearcast 30sec AD FINAL_1080p_full.mov</url>

<in>0</in>

<out>750</out>

<routing>

<channel>0</channel>

</routing>

</source>

</segment>

</audiotrack>

</layer>

<layer>

...

</layer>

<subtitles>

...

</subtitles>

<auxdata>

...

</auxdata>

</media>

</composition>

</project>

</axf>

Compositions can have multiple tracks, multiple layers and can deal with complex editing information, just like any NLE timeline.
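As a small illustration of how a composition document can be read by a script, the Python sketch below computes the duration of a composition from its in and out points and its frame rate. It makes two assumptions that are not stated in this document: that in and out are frame counts with an exclusive out point, and that the frame rate is written as numerator:denominator. The embedded sample keeps only the elements needed for the calculation.

```python
import xml.etree.ElementTree as ET
from fractions import Fraction

# Reduced version of the composition example above.
COMPOSITION = b"""<axf>
  <project>
    <composition>
      <name>simple</name>
      <in>0</in>
      <out>750</out>
      <format>
        <framerate>25:1</framerate>
      </format>
    </composition>
  </project>
</axf>"""


def composition_duration_seconds(axf_xml):
    """Return the composition duration in seconds as an exact fraction."""
    comp = ET.fromstring(axf_xml).find("./project/composition")
    frames = int(comp.findtext("out")) - int(comp.findtext("in"))
    numerator, denominator = comp.findtext("format/framerate").split(":")
    return Fraction(frames) / Fraction(int(numerator), int(denominator))


print(composition_duration_seconds(COMPOSITION))
```

Under these assumptions, 750 frames at 25:1 correspond to 30 seconds, which matches the clip name used in the example.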

4.6. Output presets

Output presets are XML descriptions that define the output format for the input media passed to Storm server. They are templates that can be reused when transcoding.

The following example shows an output preset that describes an MP4 container with H.264-encoded video at 720p resolution:

<?xml version="1.0" encoding="UTF-8" ?>

<MasterDeliverySpecification>

<Name>720p_H264_MP4_noTCStart</Name>

<Type>MPEG4</Type>

<Specification>custom</Specification>

<Shim>isobmff.mp4</Shim>

<ShimVersion>default</ShimVersion>

<Presentation>monoscopic</Presentation>

<Video>

<FrameWidthList>

<FrameWidth>1280</FrameWidth>

</FrameWidthList>

<FrameHeightList>

<FrameHeight>720</FrameHeight>

</FrameHeightList>

<FrameRateList>

<FrameRate>24:1</FrameRate>

</FrameRateList>

<Codec>

<Id>h264</Id>

<ColorEncoding>YCbCr.4:2:2</ColorEncoding>

<Profile>baseline</Profile>

</Codec>

<Colorimetry>

<ColorSpace>custom</ColorSpace>

<CodeRange>head</CodeRange>

</Colorimetry>

</Video>

<Audio>

<Codec>

<Id>aac</Id>

</Codec>

<SoundfieldList/>

</Audio>

</MasterDeliverySpecification>

For a detailed description of the Output Preset file format see: MT-TN-20 Output Preset File Format Specification
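Because presets are reusable templates, it can help to summarize what a preset will produce before using it. The Python sketch below extracts a few fields from a preset shaped like the example above. The helper `summarize_preset` is hypothetical, it reads only the first entry of each list element, and the embedded sample is a reduced copy of the example rather than a complete preset.

```python
import xml.etree.ElementTree as ET

# Reduced copy of the output preset example above.
PRESET = b"""<MasterDeliverySpecification>
  <Name>720p_H264_MP4_noTCStart</Name>
  <Video>
    <FrameWidthList><FrameWidth>1280</FrameWidth></FrameWidthList>
    <FrameHeightList><FrameHeight>720</FrameHeight></FrameHeightList>
    <Codec><Id>h264</Id></Codec>
  </Video>
  <Audio>
    <Codec><Id>aac</Id></Codec>
  </Audio>
</MasterDeliverySpecification>"""


def summarize_preset(preset_xml):
    """Return the name, frame size and codec ids declared by a preset."""
    root = ET.fromstring(preset_xml)
    return {
        "name": root.findtext("Name"),
        "width": int(root.findtext("Video/FrameWidthList/FrameWidth")),
        "height": int(root.findtext("Video/FrameHeightList/FrameHeight")),
        "video_codec": root.findtext("Video/Codec/Id"),
        "audio_codec": root.findtext("Audio/Codec/Id"),
    }


print(summarize_preset(PRESET))
```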

4.7. Understanding logs

There are various objects that can be manipulated by TORNADO. These objects are entities upon which the process can execute an action, such as jobs, output-presets, watch-folders or workers. Moreover, each object has its own dedicated manager. The role of the manager is to handle the object’s lifecycle.

The actions that happen at the manager level and at the object level can generate events. This difference is reflected in the way logs can be accessed via the REST API: /<object_type>/log returns the events raised at the manager level, while /<object_type>/<object_UUID>/log returns the events raised for a specific object.

For example, in the case of job processing, the object is the job itself while the manager handles the different stages required for executing the type of task represented by the job.

When a job is created, as in the case of a transcoding job, a series of steps are executed in order to validate its properties and create the delivery engine.
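The distinction between manager-level and object-level logs can be sketched in Python as follows. The path layout follows the /jobs/log and /jobs/<job_UUID>/log calls used in this section; the helper names `log_path` and `error_messages` and the job id "1234" are hypothetical, and the sample response simply has the same shape as the log responses shown in this section.

```python
import json


def log_path(object_type, object_uuid=None):
    """Return the manager-level path, or the object-level path if a UUID is given."""
    if object_uuid is None:
        return f"/{object_type}/log"
    return f"/{object_type}/{object_uuid}/log"


def error_messages(response_text):
    """Return the messages of all events whose severity is "error"."""
    events = json.loads(response_text).get("events", [])
    return [e["message"] for e in events if e.get("severity") == "error"]


# Sample response with the same structure as the log responses in this section.
SAMPLE = """{
  "events": [
    {"timestamp": "2022-02-07T11:13:53.111+00:00", "severity": "error",
     "message": "Processing not supported for context", "code": 8}
  ],
  "status": "ok"
}"""

print(log_path("jobs"))            # manager level
print(log_path("jobs", "1234"))    # object level, hypothetical job id
print(error_messages(SAMPLE))
```

Note that a log response can carry "status": "ok" while still listing error events, so a client needs to inspect the events themselves.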

If an error was made in the request structure, like using the wrong execution context, then an error is raised only at the manager level and an event is generated. The event can be consulted by calling the /jobs/log endpoint:

{

"events": [

{

"timestamp": "2022-02-07T11:13:53.111+00:00",

"severity": "error",

"message": "Processing not supported for context",

"code": 8

}

],

"status": "ok"

}

If an error was made at the codec quality level when configuring a preset, then an error is raised while validating the codec constraints. This error will generate one or more events at the job object level and can be consulted by calling the /jobs/<job_UUID>/log endpoint:

{

"events": [

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"severity": "error",

"message": "Video codec quality not supported!",

"code": 8

},

{

"timestamp": "2022-02-07T12:29:44.820+00:00",

"severity": "error",

"message": "Cannot create delivery engine",

"info": "D:/TEST_FOOTAGE/DCP/DCP/sintel-with-markers",

"code": 2

}

],

"status": "ok"

}

The first event is generated by the codec quality validation failure that, in turn, causes an error in creating the delivery engine, which generates another event. These errors will cause the job process to fail. Because job processing includes the validation and engine creation procedures, that failure is also logged at the manager level, which can be consulted by calling the /jobs/log endpoint:

{

"events": [

{

"timestamp": "2022-02-07T12:29:47.237+00:00",

"severity": "error",

"message": "Failed during job processing",

"code": 8

}

],

"status": "ok"

}

5. About STORM node architecture

5.1. Manager and Worker nodes

When starting STORM Server in webserver mode, via the websrv

STORM Server can also be used as a manager node. In this case it has a registered list of worker nodes that handle the received jobs while the manager node is used to distribute jobs and control the worker pool.

5.2. Creating Worker nodes

A STORM Server worker node can be started as a subprocess of a manager node or as a standalone webserver.

A subprocess worker node can only be created by a manager node and it can only run locally on the same machine as the manager.

A standalone worker node can only be created by an external process and it can run locally or remotely.

In both cases a worker always needs to register with a manager node when started.

5.2.1. Starting Worker nodes as subprocesses

In order to start workers as subprocesses, a manager node must first be started, via the CLI, with the -workers option.

Example starting a manager node with 4 workers as subprocesses:

stormserver websrv -workers 4

5.2.2. Starting Worker nodes manually

A worker node can be started manually or via an automated script used by an external application. In this case prior knowledge of existing manager nodes and their addresses is required as a worker can be started only if it can register with a manager node.

Example starting a worker node with a given manager:

stormserver webwrk -address "http://192.168.1.10" -port 6060 -nodeaddress "http://192.168.10.1:8080"

The above command will start a worker on a machine with the given address and port, and register it with the manager node specified by nodeaddress.

5.3. Managing workers

In a standard configuration, STORM Server processes media via a list of queued jobs managed on a single, local node. These jobs are handled sequentially, one at a time.

There are cases when multiple nodes are required to handle jobs. In that case some of those nodes will be workers.

Workers can be managed by using the dedicated workers REST API available on the manager node on which they are registered.

STORM API Reference

1. Compositions

1.1. Obtain the list of compositions

Storm maintains a list of compositions that can be obtained at any time. The following example shows you how to obtain the list of compositions registered on a given node:


| Access | Details |
|---|---|
| URL | /compositions |
| Query Params | To be added |
| Method | GET |

1.2. Add a new composition

The following example shows you how to add a new composition to the list managed by a specific node:

| Access | Details |
|---|---|
| URL | /compositions |
| Method | POST |

1.2.1. Request

curl -X POST -H "Content-Type: text/xml" -T E:/simple_composition.xml "http://127.0.0.1:8080/compositions"

1.2.2. Parameters

The command sends an XML composition to be added to the list of managed compositions. No other parameters are associated with this command.
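The same request can be prepared from a script. The Python sketch below builds, without sending, a POST request equivalent to the cURL command above using only the standard library. The function name `add_composition_request` is hypothetical, and the placeholder body `<axf/>` stands in for the content of a real composition file.

```python
import urllib.request


def add_composition_request(base_url, axf_bytes):
    """Build a POST request that uploads an XML composition."""
    return urllib.request.Request(
        url=f"{base_url}/compositions",
        data=axf_bytes,
        headers={"Content-Type": "text/xml"},
        method="POST",
    )


# Placeholder body; a real call would read the bytes of the composition file.
req = add_composition_request("http://127.0.0.1:8080", b"<axf/>")
print(req.get_method(), req.full_url)
```

Calling `urllib.request.urlopen(req)` against a running STORM server would send the request; the body of the reply can then be decoded with the `json` module.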

1.2.3. Response

The response contains the status of the operation as well as the different timestamps associated with the request.

A UUID is assigned to the new composition and included in the response. This unique ID can be used for retrieving and further managing the composition after its creation.

{

"uuid": "ae81f492-04f5-43d1-b766-64dcbb97dc3b",

"name": "SimpleComposition",

"filename": "<user-home>/AppData/Roaming/Marquise Technologies/mediabase/TORNADO/session/compositions/ae81f492-04f5-43d1-b766-64dcbb97dc3b.axf"

}

1.3. Delete a composition

The following example shows you how to delete a composition from the list of compositions managed by a specific node:

| Access | Details |
|---|---|
| URL | /compositions/{composition UUID} |
| Method | DELETE |

1.3.1. Request

curl -X DELETE "http://127.0.0.1:8080/compositions/13ff097c-d4c9-43c0-a272-fb69a7a062e0"

1.3.2. Response

The response is the status of the operation.

{

"status" : "ok"

}

The following table shows the possible status codes that this function can return:

| Status | Details |

|---|---|

ok |

The composition was successfully deleted. |

error |

The composition cannot be found in the list of managed processing graphs by this node. |

busy |

The composition is currently in use and cannot be deleted. |
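A client will typically branch on these three status values. The following is a minimal sketch of such a mapping. The function name and the human-readable outcome strings are hypothetical, and only the `status` field shown above is assumed to be present in the response.

```python
import json

# Outcome texts are illustrative; only the keys come from the status table.
DELETE_OUTCOMES = {
    "ok": "deleted",
    "error": "not found on this node",
    "busy": "in use, retry later",
}

def interpret_delete_response(body):
    """Map the JSON body of a DELETE response to a short outcome string."""
    status = json.loads(body).get("status")
    if status not in DELETE_OUTCOMES:
        raise ValueError(f"unexpected status: {status!r}")
    return DELETE_OUTCOMES[status]

print(interpret_delete_response('{"status": "busy"}'))
```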

2. Color Management System

2.1. Using the CMS parameters

The Color Management System in TORNADO defines the working color space of the composition. It can be used via the CLI or the REST API for performing colorimetric transformations, like color space conversions when transcoding files.

The CMS works in two steps. The first step determines the source’s properties: TORNADO detects the input’s properties automatically, on a best-effort basis. The detected values can be modified only by using a CutList or an AXF file. The second step defines the target Color Management settings. If none are specified, the native settings of the composition, as detected during the first step, are used.

To override the default target Color Management System, specify the parameters listed below. Any parameter that is not specified falls back to the detected source value.

2.2. Color Management System Parameters

| Parameter | Type | Details |
|---|---|---|
| system | tag | A fixed text string value, defined in a controlled vocabulary, see Enums, defining the working CMS system to be used. |
| version | tag | A fixed text string value, defined in a controlled vocabulary, see Enums, defining the version of the CMS system to be used. |
| workflow | tag | A fixed text string value, defined in a controlled vocabulary, see Enums, defining the HDR workflow to be used. |
| primaries | tag | A fixed text string value, defined in a controlled vocabulary, see Enums, defining the Color Primaries to be used. |
| eotf | tag | A fixed text string value, defined in a controlled vocabulary, see Enums, defining the Electro-Optical Transfer Curve, also called gamma curve, to be used. |
| matrix | tag | A fixed text string value, defined in a controlled vocabulary, see Enums, defining the Coding Equations to be used. |
| cat | tag | A fixed text string value, defined in a controlled vocabulary, see Enums, defining the Chromatic Adaptation to be used. |
| dtm | tag | A fixed text string value, defined in a controlled vocabulary, see Enums, defining the Dynamic Tone Mapping to be used. |
| dtmtarget | tag | A fixed text string value, defined in a controlled vocabulary, see Enums, defining the Dynamic Tone Mapping Target to be used. |

Below is an example of using CMS override parameters, via the CLI or REST APIs, to define the target CMS for a DCP composition used to generate an MPEG4 h264 proxy:

2.3. Command Line Interface example

stormserver xcode -i "E:/SOURCE/DCP" -preset "E:/PRESETS/preset_proxy_h264.xml" -cmssystem "MTCMS" -cmsworkflow "custom" -cmsprimaries "ITU.BT.709" -cmseotf "ITU-R.BT.709" -cmscat "bradford" -o "E:/RESULT/dcp_proxy.mp4"

2.4. REST API example

{

"context" : "xcode",

"input" : "E:/SOURCE/DCP",

"cms" : {

"system" : "MTCMS",

"workflow" : "custom",

"primaries" : "ITU.BT.709",

"eotf" : "ITU-R.BT.709",

"cat" : "bradford"

},

"preset" : "E:/PRESETS/preset_proxy_h264.xml",

"output" : "E:/RESULT/dcp_proxy.mp4"

}

This example will override the detected native CMS settings by defining the target CMS. This will cause a colorimetric transformation using the provided values. In this specific case the proxy will be encoded to the Rec709 color space.

The first step is to specify the CMS system. By default this property has the value NATIVE, indicating that the composition’s detected CMS should be used. This parameter must be overridden in order to define a custom workflow. If you select MTCMS, then you must specify the working color space (primaries), the transfer curve (eotf) and the chromatic adaptation (cat). These parameters define the target CMS.

The transformation to the target CMS is made based on the source parameters. If no metadata is available at the moment of source characterisation then Rec709 is used by default.
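The rule that MTCMS needs primaries, eotf and cat can be checked on the client before a job is submitted. The sketch below is one possible way to do that. The helper `build_cms` is hypothetical, the check runs purely on the client side, and whether the server performs the same validation is not stated in this document.

```python
import json

# Per the text above, these three settings must accompany the MTCMS system.
REQUIRED_FOR_MTCMS = ("primaries", "eotf", "cat")

def build_cms(system="NATIVE", **settings):
    """Return the "cms" object of a job request, checking the MTCMS rule."""
    cms = {"system": system, **settings}
    if system == "MTCMS":
        missing = [key for key in REQUIRED_FOR_MTCMS if key not in cms]
        if missing:
            raise ValueError("MTCMS requires: " + ", ".join(missing))
    return cms

job = {
    "context": "xcode",
    "input": "E:/SOURCE/DCP",
    "cms": build_cms("MTCMS", workflow="custom", primaries="ITU.BT.709",
                     eotf="ITU-R.BT.709", cat="bradford"),
    "preset": "E:/PRESETS/preset_proxy_h264.xml",
    "output": "E:/RESULT/dcp_proxy.mp4",
}
print(json.dumps(job, indent=2))
```

Calling `build_cms()` with no arguments yields `{"system": "NATIVE"}`, which corresponds to keeping the detected settings.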

3. Enumerables

3.1. Enumerable objects and their use

Certain values in the file formats specific to TORNADO, like Output Presets or AXFs, use a predefined controlled vocabulary. These values are reflected in the various enumerable objects accessible via the CLI or the REST API. The list of the currently available objects is subject to a continuous revision and extension.

While the structure of an enumerable object might vary slightly, there are element types that are common to all enum objects. One of these elements is the tag.

3.2. Enumerate objects

The following example shows you how to list the currently available enumerable objects:

| Access | Details |
|---|---|
| URL | /enum/<enum_object_name> |
| Method | GET |

3.2.2. Enumerable object names

| Object Name | Description |
|---|---|
| masters | Enumerates the available mastering formats |
| specifications | Enumerates the available specifications |
| shims | Enumerates the available shims |
| shimversions | Enumerates the available shim versions |
| formats | Enumerates the available input and output formats |
| colorspaces | Enumerates the available color-spaces |
| cmssystem | Enumerates the available CMS systems |
| cmsworkflow | Enumerates the available CMS workflows |
| cmsprimaries | Enumerates the available CMS color primaries |
| cmseotf | Enumerates the available CMS electro-optical transfer functions |
| cmsmatrix | Enumerates the available CMS coding equations |
| cmsdtm | Enumerates the available CMS dynamic tone mappings |
| cmscat | Enumerates the available CMS chromatic adaptations |
| cmsdtmt | Enumerates the available CMS dynamic tone mapping targets |
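Since the tag is the value that job requests and presets refer to, a client usually only needs the list of tags from an enum response. The sketch below assumes the response shape documented for `masters` in the Response subsection (an array keyed by the object name plus a `status` field). Other enumerable objects may differ slightly, and the function name is hypothetical.

```python
import json

def tags_from_enum_response(body, object_name):
    """Extract the "tag" of every entry in an enum response."""
    payload = json.loads(body)
    if payload.get("status") != "ok":
        raise RuntimeError(f"enum request failed: {payload.get('status')!r}")
    return [entry["tag"] for entry in payload[object_name]]

# Shortened copy of the documented /enum/masters response.
sample = json.dumps({
    "masters": [
        {"name": "AICP Master", "description": "Export AICP Master", "tag": "AICP"},
        {"name": "YUV4MPEG2 Master", "description": "Export YUV4MPEG2 Master", "tag": "Y4M"},
    ],
    "status": "ok",
})
print(tags_from_enum_response(sample, "masters"))
```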

The following request lists the available masters.

curl -X GET "http://127.0.0.1:8080/enum/masters"

3.2.3. Response

The response is the status of the operation as well as an array containing the available masters:

{

"masters": [

{

"name": "AICP Master",

"description": "Export AICP Master",

"tag": "AICP"

},

{

"name": "Apple iTunes Package",

"description": "Export Apple iTunes Package",

"tag": "AIT"

},

...

{

"name": "YUV4MPEG2 Master",

"description": "Export YUV4MPEG2 Master",

"tag": "Y4M"

}

],

"status": "ok"

}

4. Nodes Control

4.1. Controlling nodes via the REST API

STORM Server nodes can be controlled via the dedicated REST API. The API can be used to put in place automated polling mechanisms that check the status of nodes, shut them down or restart them.

4.2. Check the status of a node

The following example shows you how to check the status of a node:

| Access | Details |
|---|---|
| URL | /control/status |
| Method | GET |

4.3. Shutting down a node

Shutting down a node will terminate the process and all managed workers registered with this node.

Note: This operation blocks until all registered nodes have been shut down. Take this into account when implementing timeouts for this call.

The following example shows you how to shut down a node:

| Access | Details |
|---|---|
| URL | /control/shutdown |
| Method | POST |
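Both the status polling and the blocking shutdown call benefit from an explicit timeout on the client side. The following is a minimal polling sketch. `poll_until` is a hypothetical helper, and because the response body of /control/status is not documented in this section, the dictionaries used in the demonstration are purely illustrative stand-ins.

```python
import time

def poll_until(fetch_status, is_done, interval=1.0, timeout=30.0,
               sleep=time.sleep, clock=time.monotonic):
    """Call fetch_status() until is_done(result) is true or the timeout expires."""
    deadline = clock() + timeout
    while True:
        result = fetch_status()
        if is_done(result):
            return result
        if clock() >= deadline:
            raise TimeoutError(f"no final state after {timeout} seconds")
        sleep(interval)

# Stand-in for repeated GET /control/status calls (illustrative values only).
fake_responses = iter([{"status": "starting"}, {"status": "starting"}, {"status": "ok"}])
final = poll_until(lambda: next(fake_responses),
                   lambda reply: reply["status"] == "ok",
                   interval=0.0)
print(final)
```

In a real client, `fetch_status` would perform the HTTP request, and the HTTP timeout used for /control/shutdown would need to be long enough to cover the shutdown of all registered nodes.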

5. Jobs

5.1. Obtain the list of jobs

Storm maintains a list of jobs that can be obtained at any time. This is the easiest method to get the status of all the running or pending jobs. The following example shows you how to obtain the list of jobs currently running or pending on a given node:

| Access | Details |
|---|---|
| URL | /jobs |
| Method | GET |

5.2. Get job description

The following example shows you how to retrieve the details for a given job:

| Access | Details |
|---|---|
| URL | /jobs/<job_UUID> |
| Method | GET |

5.2.2. Response

The response contains the status of the operation as well as the description of the requested job, including its UUID, which can be used for further job manipulation requests:

{

"job": {

"uuid": "b9206f48-42a3-4557-a80e-1912160b776e",

"priority": 0,

"status": "queued",

"requesttimestamp": "2021-02-17T21:44:49+00:00",

"input": "F:/ALEXA_Mini_LF_MXFARRIRAW/F004C003_190925_MN99.mxf",

"output": "F:/ALEXA_Mini_LF_MXFARRIRAW/F004C003_190925_MN99_metadata.xml",

"format": "xml",

"context": "mediainfo"

},

"status": "ok"

}

5.3. Add a new job

The following example shows you how to add a new job to the list of jobs of a specific node:

| Access | Details |
|---|---|
| URL | /jobs |
| Method | POST |

5.3.2. Parameters

| Parameter Name | Value | Description |
|---|---|---|
| context | string | Mandatory parameter. Defines the operational context of the job. See Operational Contexts for more information. |
| input | string | Mandatory parameter. The path of the input file or folder for the job. |
| output | string | Mandatory parameter. The path of the output file or folder for the job. |
| master | string | Optional parameter. A fixed text string value defined in a controlled vocabulary. It cannot be used together with the |
| format | string | Optional parameter. A fixed text string value defined in a controlled vocabulary that defines the container format (e.g. "quicktime"). It cannot be used together with the |
| preset | string | Optional parameter. The path of the XML file containing the parameter definition. It cannot be used together with the |
| cms | object | Optional parameter. A JSON object defining the composition’s ColorManagementSystem and its properties. |
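Before posting a job, a client can verify that the three mandatory parameters are present. The sketch below does only that. The helper name and the file paths are hypothetical, and the mutual-exclusion rules between `master`, `format` and `preset` are deliberately not enforced, because they are not fully spelled out above.

```python
import json

MANDATORY_JOB_PARAMETERS = ("context", "input", "output")

def build_job_request(**parameters):
    """Return the JSON body for POST /jobs after checking mandatory fields."""
    missing = [name for name in MANDATORY_JOB_PARAMETERS if not parameters.get(name)]
    if missing:
        raise ValueError("missing mandatory parameter(s): " + ", ".join(missing))
    return json.dumps(parameters)

# Illustrative paths only.
body = build_job_request(
    context="mediainfo",
    input="F:/media/clip.mxf",
    output="F:/media/clip_metadata.xml",
    format="xml",
)
print(body)
```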

The following request exports the file metadata in XML. See MT-TN-10 Report XML File Format Specification for more information on the document structure.

{

"context" : "mediainfo",

"input" : "F:/ALEXA_Mini_LF_MXFARRIRAW/F004C003_190925_MN99.mxf",

"output" : "F:/ALEXA_Mini_LF_MXFARRIRAW/F004C003_190925_MN99_metadata.xml",

"format" : "xml"

}

5.3.3. Response

The response is the status of the operation as well as the UUID assigned to the new job. This unique ID can be used for further job manipulation requests:

{

"uuid": "b9206f48-42a3-4557-a80e-1912160b776e",

"priority": 0,

"status": "queued",

"requesttimestamp": "2021-02-17T21:44:49+00:00",

"input": "F:/ALEXA_Mini_LF_MXFARRIRAW/F004C003_190925_MN99.mxf",

"output": "F:/ALEXA_Mini_LF_MXFARRIRAW/F004C003_190925_MN99_metadata.xml",

"format": "xml",

"context": "mediainfo"

}

5.4. Abort and delete a running job

Sending a DELETE HTTP request once aborts the job; sending it a second time completely removes the job from the internal list.

The following example shows you how to abort or delete a running job for a specific node:

| Access | Details |
|---|---|
| URL | /jobs/<job_UUID> |
| Method | DELETE |

5.4.2. Response

The first time the DELETE request is sent the response status is "aborted" if the job exists.

{

"status" : "aborted"

}

The second time the DELETE request is sent the response status is "ok" if the job exists.

{

"status" : "ok"

}

| Status | Details |
|---|---|
| aborted | The command has been successfully received and the job is being aborted. |
| ok | The job was successfully deleted. |
| error | The job cannot be found in the list of jobs on this node. |
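The two-step behaviour described above can be wrapped in a small helper that sends the DELETE request once or twice depending on the first reply. This is a sketch under assumptions: `send_delete` is a hypothetical callable that performs one DELETE on the job URL and returns the `status` string, and whether a client needs to wait between the two calls is not specified here.

```python
def abort_and_delete(send_delete):
    """Send DELETE once, and a second time if the first reply was "aborted".

    Returns the list of status strings received, in order.
    """
    statuses = [send_delete()]
    if statuses[0] == "aborted":
        # The job was running: the second DELETE removes it from the list.
        statuses.append(send_delete())
    return statuses

# Stubbed replies standing in for two real DELETE calls.
replies = iter(["aborted", "ok"])
print(abort_and_delete(lambda: next(replies)))
```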

5.5. Obtaining a job’s log

The following example shows you how to obtain the log of a specific job:

| Access | Details |
|---|---|
| URL | /jobs/<job_UUID>/log |
| Method | GET |

5.6. Obtaining logs for all jobs

The following example shows you how to obtain the list of events that happened at the job manager’s level:

| Access | Details |
|---|---|
| URL | /jobs/log |
| Method | GET |

5.6.2. Response

{

"events": [

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Master type not supported",

"severity": "error",

"code": 2

},

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Master type not supported",

"severity": "error",

"code": 2

},

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Master type not supported",

"severity": "error",

"code": 2

}

],

"status": "ok"

}

An empty "events" array may be returned in the case when there’s nothing to be reported.

{

"events": [],

"status": "ok"

}

6. Processing Graphs

6.1. Obtain the list of processing graphs

Storm maintains a list of processing graphs that can be obtained at any time.

The following example shows you how to obtain the list of processing graphs currently registered on a given node:

| Access | Details |
|---|---|
| URL | /processinggraphs |
| Method | GET |

6.2. Add a new processing graph

The following example shows you how to add a new processing graph to the list managed by a specific node:

| Access | Details |
|---|---|
| URL | /processinggraphs |
| Method | POST |

6.2.1. Request

curl -X POST -H "Content-Type: text/xml" -T E:/processing_graph_example.xml "http://127.0.0.1:8080/processinggraphs"

6.2.2. Parameters

The command sends an XML processing graph to be added to the list of managed processing graphs. No other parameters are associated with this command.

6.2.3. Response

The response is the status of the operation as well as the UUID assigned to the new processing graph. This unique ID can be used for further managing the processing graph.

{

"uuid" : "7d16441a-45ec-4891-8f78-37e582f18c5f",

"name" : "ProcessingGraphExample",

"filename": "<user-home>/AppData/Roaming/Marquise Technologies/mediabase/TORNADO/session/processinggraphs/7d16441a-45ec-4891-8f78-37e582f18c5f.xml"

}

6.3. Delete a processing graph

The following example shows you how to delete a processing graph from the list of processing graphs managed by a specific node:

| Access | Details |
|---|---|
| URL | /processinggraphs/<processinggraph_UUID> |
| Method | DELETE |

6.3.1. Request

curl -X DELETE "http://127.0.0.1:8080/processinggraphs/13ff097c-d4c9-43c0-a272-fb69a7a062e0"

6.3.2. Response

The response is the status of the operation.

{

"status" : "ok"

}

The following table shows the possible status codes that this function can return:

| Status | Details |
|---|---|
| ok | The processing graph was successfully deleted. |
| error | The processing graph cannot be found in the list of processing graphs managed by this node. |
| busy | The processing graph is currently in use and cannot be deleted. |

6.4. Obtaining a processing-graph’s log

The following example shows you how to obtain a processing-graph’s log for a specific object:

| Access | Details |
|---|---|
| URL | /processinggraphs/<UUID>/log |
| Method | GET |

6.5. Obtaining logs for all processing-graphs

The following example shows you how to obtain the list of events that happened at the processing-graph manager’s level:

| Access | Details |
|---|---|
| URL | /processinggraphs/logs |
| Method | GET |

6.5.2. Response

{

"events": [

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Invalid request ContentType",

"severity": "error",

"code": 2

},

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Cannot write Processing Graph file",

"severity": "error",

"code": 2

}

],

"status": "ok"

}

An empty "events" array may be returned in the case when there’s nothing to be reported.

{

"events": [],

"status": "ok"

}

7. Output Presets

7.1. Obtain the list of Output Presets

Storm maintains a list of output presets that can be obtained at any time.

The following example shows you how to obtain the list of output presets currently registered on a given node:

| Access | Details |
|---|---|
| URL | /outputpresets |
| Method | GET |

7.2. Add a new Output Preset

The following example shows you how to add a new output preset to the list:

| Access | Details |
|---|---|
| URL | /outputpresets |
| Method | POST |

7.2.1. Request

curl -X POST -H "Content-Type: text/xml" -T E:/output_preset_example.xml "http://127.0.0.1:8080/outputpresets"

7.2.2. Parameters

The command sends an XML output preset to be added to the list of managed presets. No other parameters are associated with this command.

7.2.3. Response

The response is the status of the operation as well as the UUID assigned to the new output preset. This unique ID can be used for further managing the preset.

{

"uuid": "a51f1483-217d-4ddb-a926-4d13d6ffc309",

"name": "TestPreset",

"filename": "<user-home>/AppData/Roaming/Marquise Technologies/mediabase/TORNADO/session/outputpresets/a51f1483-217d-4ddb-a926-4d13d6ffc309.xml"

}

7.3. Delete an Output Preset

The following example shows you how to delete an output preset from the list of output presets managed by a specific node:

| Access | Details |
|---|---|
| URL | /outputpresets/<outputpreset_UUID> |
| Method | DELETE |

7.3.1. Request

curl -X DELETE "http://127.0.0.1:8080/outputpresets/13ff097c-d4c9-43c0-a272-fb69a7a062e0"

7.3.2. Response

The response is the status of the operation.

{

"status" : "ok"

}

The following table shows the possible status codes that this function can return:

| Status | Details |
|---|---|
| ok | The output preset was successfully deleted. |
| error | The output preset cannot be found in the list of output presets managed by this node. |

7.4. Obtaining an output preset’s log

The following example shows you how to obtain an output-preset’s log for a specific object:

| Access | Details |
|---|---|
| URL | /outputpresets/<UUID>/log |
| Method | GET |

7.5. Obtaining logs for all output presets

The following example shows you how to obtain the list of events that happened at the output-preset manager’s level:

| Access | Details |
|---|---|
| URL | /outputpresets/logs |
| Method | GET |

7.5.2. Response

{

"events": [

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Invalid request ContentType",

"severity": "error",

"code": 2

},

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Cannot write Processing Graph file",

"severity": "error",

"code": 2

}

],

"status": "ok"

}

An empty "events" array may be returned in the case when there’s nothing to be reported.

{

"events": [],

"status": "ok"

}

8. Overlays

8.1. Overlays

Overlays are XML descriptions that define the format of the text and images to be overlaid on top of a transcoding job’s result.

The following example shows an overlay that describes a text element, using a tag element to capture the clip’s name. The text is positioned 5% from the left side and 5% from the top side of the image container, and is left-aligned horizontally and bottom-aligned vertically:

<?xml version="1.0" encoding="UTF-8" ?>

<MTOverlaysTemplate>

<Text hpos="5" vpos="5" halign="left" valign="bottom">NAME: $ClipName$</Text>

</MTOverlaysTemplate>

For a detailed description of the Overlay file format see: MT-TN-50 Overlay File Format Specification
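When overlays are generated programmatically, building the XML with a library avoids quoting and escaping mistakes. The sketch below reproduces the single-text-element example using Python's standard `xml.etree.ElementTree` module. It uses only the element and attribute names visible in that example, the helper `build_overlay` is hypothetical, and the full format is defined in MT-TN-50, which this sketch does not attempt to cover.

```python
import xml.etree.ElementTree as ET

def build_overlay(text, hpos, vpos, halign, valign):
    """Serialize an overlay template containing a single Text element."""
    root = ET.Element("MTOverlaysTemplate")
    node = ET.SubElement(root, "Text", {
        "hpos": str(hpos),
        "vpos": str(vpos),
        "halign": halign,
        "valign": valign,
    })
    node.text = text
    # xml_declaration requires Python 3.8 or later.
    return ET.tostring(root, encoding="UTF-8", xml_declaration=True)

overlay_xml = build_overlay("NAME: $ClipName$", 5, 5, "left", "bottom")
print(overlay_xml.decode("utf-8"))
```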

8.2. Add a new Overlay

The following example shows you how to add a new overlay to the list:

| Access | Details |
|---|---|
| URL | /overlays |
| Method | POST |

8.2.1. Request

curl -X POST -H "Content-Type: text/xml" -T E:/overlay_example.xml "http://127.0.0.1:8080/overlays"

8.2.2. Parameters

The command sends an XML overlay to be added to the list of managed overlays. No other parameters are associated with this command.

8.2.3. Response

The response is the status of the operation as well as the UUID assigned to the new overlay. This unique ID can be used for further managing the overlay.

{

"uuid": "dad697dd-da6a-4b5b-befe-85142e46e79f",

"name": "TestOverlay",

"filename": "<user-home>/AppData/Roaming/Marquise Technologies/mediabase/TORNADO/session/overlays/dad697dd-da6a-4b5b-befe-85142e46e79f.xml"

}

8.3. Delete an Overlay

The following example shows you how to delete an overlay from the managed list for a specific node:

| Access | Details |
|---|---|
| URL | /overlays/<overlay_UUID> |
| Method | DELETE |

8.3.2. Response

The response is the status of the operation.

{

"status" : "ok"

}

The following table shows the possible status codes that this function can return:

| Status | Details |
|---|---|
| ok | The overlay was successfully deleted. |
| error | The overlay cannot be found in the managed list for this node. |

8.4. Obtain the list of Overlays

Storm maintains a list of overlays that can be obtained at any time.

The following example shows you how to obtain the list of overlays currently registered on a given node:

| Access | Details |
|---|---|
| URL | /overlays |
| Method | GET |

8.4.2. Response

{

"overlays": [

{

"uuid": "7370d3d8-7bc8-4900-a304-9e24ebcbe1c6",

"name": "",

"filename": "<user-home>/AppData/Roaming/Marquise Technologies/mediabase/TORNADO/session/overlays/7370d3d8-7bc8-4900-a304-9e24ebcbe1c6.xml"

},

{

"uuid": "d39a5944-065f-470d-8b5f-fb914f2e03d8",

"name": "",

"filename": "<user-home>/AppData/Roaming/Marquise Technologies/mediabase/TORNADO/session/overlays/d39a5944-065f-470d-8b5f-fb914f2e03d8.xml"

}

],

"status": "ok"

}

8.5. Obtaining an overlay’s log

The following example shows you how to obtain an overlay’s log for a specific object:

| Access | Details |
|---|---|
| URL | /overlays/<UUID>/log |
| Method | GET |

8.6. Obtaining logs for all overlays

The following example shows you how to obtain the list of events that happened at the overlay manager’s level:

| Access | Details |
|---|---|
| URL | /overlays/logs |
| Method | GET |

8.6.2. Response

{

"events": [

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Invalid request ContentType",

"severity": "error",

"code": 2

},

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Cannot create Overlay (not enough memory)",

"severity": "error",

"code": 1

}

],

"status": "ok"

}

An empty "events" array may be returned in the case when there’s nothing to be reported.

{

"events": [],

"status": "ok"

}

9. Watchfolders

9.1. Obtain the list of watchfolders

Storm maintains a list of watchfolders that can be obtained at any time. This is the easiest method to get all the monitored watchfolders along with their status.

The following example shows you how to obtain the list of watchfolders currently registered on a given node:

| Access | Details |
|---|---|
| URL | /watchfolders |
| Method | GET |

9.1.2. Response

{

"status" : "ok",

"watchfolders" :

[

{

"uuid" : "4e64937f-b254-4e52-af73-48f8a4674feb",

"status" : "active",

"processgraphid" : "509f9f3a-4c4d-43a7-a2ac-a85c764f63c3",

"input" : "E:/MEDIA_SOURCE",

"output" : "E:/MEDIA_DESTINATION"

},

{

"uuid" : "e0e5c68d-18bf-49b3-aa11-cc0ce838c8fc",

"status" : "paused",

"processgraphid" : "fadd6541-f81c-4be4-add6-f5b265b69411",

"input" : "E:/RECEIVED_FILES",

"output" : "E:/PROCESSED_FILES"

}

]

}

9.2. Add a new watchfolder

The following example shows you how to add a new watchfolder to the list of watchfolders monitored by a specific node:

| Access | Details |
|---|---|
| URL | /watchfolders |
| Method | POST |

9.2.2. Parameters

| Parameter Name | Value | Description |
|---|---|---|
| name | string | Mandatory parameter. Custom defined name of the watchfolder. |
| preset | string | Mandatory parameter. Mutually exclusive with |
| input | string | Mandatory parameter. The path to the folder that should be used as input. |
| output | string | Mandatory parameter. The path to the folder that should be used as output. |
| context | string | Mandatory parameter. Defines the operational context of the jobs to be handled by the current watchfolder. See Operational Contexts for more information. |
| filters | array | Optional parameter. List of extension filter objects to be applied to the input folder. |
| fileextension | string | Mandatory parameter in a |
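The `filters` array is a list of objects that each carry one `fileextension`. A client can derive it from a plain list of extensions, as in the sketch below. The helper name is hypothetical, only the preset-based form of the request is covered, and the normalization of extensions (leading dot removed, lower case) is an assumption based on values such as "mxf" and "mov" rather than a documented rule.

```python
def build_watchfolder_request(name, preset, input_dir, output_dir, context,
                              extensions=()):
    """Return the JSON-serializable body for POST /watchfolders."""
    payload = {
        "name": name,
        "preset": preset,
        "input": input_dir,
        "output": output_dir,
        "context": context,
    }
    if extensions:
        # One filter object per extension.
        payload["filters"] = [
            {"fileextension": ext.lstrip(".").lower()} for ext in extensions
        ]
    return payload

payload = build_watchfolder_request(
    "MOV_MXF_folder",
    "E:/presets/preset_IMF_NFX_2016_1080p_stereo.xml",
    "E:/RECEIVED_FILES",
    "E:/PROCESSED_FILES",
    "xcode",
    extensions=[".MXF", "mov"],
)
print(payload["filters"])
```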

The following example creates a watchfolder named "MOV_MXF_folder" that transcodes all files matching the extension filters, using the passed preset and context to define the job properties.

{

"name" : "MOV_MXF_folder",

"preset" : "E:/presets/preset_IMF_NFX_2016_1080p_stereo.xml",

"input" : "E:/RECEIVED_FILES",

"output" : "E:/PROCESSED_FILES",

"context" : "xcode",

"filters" :

[

{

"fileextension" : "mxf"

},

{

"fileextension" : "mov"

}

]

}

9.2.3. Response

The response is the status of the operation as well as the UUID assigned to the new watchfolder. This unique ID can be used for further managing the watchfolder.

{

"uuid": "3b3fc808-0053-4a20-beae-c8344e8aa384",

"priority": 0,

"status": "active",

"name": "MOV_MXF_folder",

"requesttimestamp": "2021-12-01T17:17:44+00:00",

"input": "E:/RECEIVED_FILES",

"output": "E:/PROCESSED_FILES",

"preset": "E:/presets/preset_IMF_NFX_2016_1080p_stereo.xml",

"context": "xcode"

}

9.3. Delete a watchfolder

The following example shows you how to delete a watchfolder from the list of monitored watchfolders on a specific node:

| Access | Details |
|---|---|
| URL | /watchfolders/{watchfolder UUID} |
| Method | DELETE |

9.3.1. Request

curl -X DELETE "http://127.0.0.1:8080/watchfolders/13ff097c-d4c9-43c0-a272-fb69a7a062e0"

9.3.2. Response

The response is the status of the operation.

{

"status" : "ok"

}

The following table shows the possible status codes that this function can return:

| Status | Details |
|---|---|
| ok | The watchfolder was successfully deleted. |
| error | The watchfolder cannot be found in the list of watchfolders monitored by this node. |

9.4. Changing the status of a watchfolder

The following example shows you how to change the status of a watchfolder monitored by a specific node:

| Access | Details |
|---|---|
| URL | /watchfolders/<watchfolder_uuid> |
| Method | PUT |

9.4.1. Request

curl -X PUT -H "Content-Type: application/json" -d '{"status":"paused"}' "http://127.0.0.1:8080/watchfolders/887b12ee-30f1-4059-afff-db3e89a4e270"

10. Workers

10.1. Obtaining the list of workers

STORM Server maintains a list of managed workers that can be obtained at any time. The following example shows you how to obtain the list of workers being managed by the current node:

| Access | Details |
|---|---|
| URL | /workers |
| Method | GET |

10.1.2. Response

The response is an array containing the worker descriptors as well as the status of the operation:

{

"status" : "ok",

"workers": [

{

"uuid": "8c80d6d9-f8a6-445f-828d-6f0bc8edcc5b",

"address": "http://localhost",

"port": 65535

},

{

"uuid": "77038177-5fa4-471b-a5ec-e18d1f3cb9e9",

"address": "http://localhost",

"port": 65534

},

{

"uuid": "556e68b6-b185-4980-91ac-b57bdefe4152",

"address": "http://192.168.1.250",

"port": 9090

},

{

"uuid": "a016a364-5a86-4f4d-98f4-abe53e0a8275",

"address": "http://192.168.1.150",

"port": 9090

}

]

}

10.2. Add a new worker

The following example describes the API for adding a new worker to the manager’s worker list.

Note: This API is automatically called when a worker is either spawned locally or created remotely (see Manager and Worker nodes). It should not be used by an external application, except for testing purposes.

| Access | Details |
|---|---|
| URL | /workers |
| Method | POST |

10.2.2. Parameters

| Parameter Name | Value | Description |
|---|---|---|
| address | string | Mandatory parameter. Defines the address of the worker node. |
| port | number | Mandatory parameter. The port on which the worker node will communicate. |
| pid | number | Mandatory parameter. The Process ID of the worker node assigned by the Operating System. |

{

"address": "http://localhost",

"port": 6060,

"pid": 10252

}

10.2.3. Response

The response is the status of the operation as well as the UUID assigned to the new worker. This unique ID can be used for further worker manipulation requests. If an error occurred during the process, the response only contains the status of the operation, set to "error".

{

"uuid": "b9206f48-42a3-4557-a80e-1912160b776e",

"status": "ok"

}

10.3. Delete a running worker

The following example shows you how to delete a specific worker from the manager’s node list.

Note: Using this API will also call the control shutdown API (see Shutting down a node) with the worker’s parameters. As such, the status code of this operation will be the logical conjunction of the delete and shutdown operations.

| Access | Details |
|---|---|
| URL | /workers/{worker UUID} |
| Method | DELETE |
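The "logical conjunction" mentioned in the note means the combined call succeeds only if both underlying operations succeed. A minimal sketch of that rule follows. The function name is hypothetical, and the use of "error" as the combined failure value is an assumption, since the exact status returned on failure is not listed in this section.

```python
def combined_status(delete_status, shutdown_status):
    """Return "ok" only when both the delete and the shutdown reported "ok"."""
    both_ok = delete_status == "ok" and shutdown_status == "ok"
    return "ok" if both_ok else "error"

print(combined_status("ok", "ok"))
print(combined_status("ok", "error"))
```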

10.4. Obtaining a worker’s log

The following example shows you how to obtain a worker’s log for a specific object:

| Access | Details |
|---|---|
| URL | /workers/<worker_UUID>/log |
| Method | GET |

10.4.2. Response

{

"events": [

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Invalid UUID.",

"severity": "error",

"code": 2

},

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Cannot shutdown Worker (worker unreachable)",

"severity": "error",

"code": 7

}

],

"status": "ok"

}

An empty "events" array may be returned in the case when there’s nothing to be reported.

{

"events": [],

"status": "ok"

}

10.5. Obtaining logs for all workers

The following example shows you how to obtain the list of events that happened at the worker manager’s level:

| Access | Details |
|---|---|
| URL | /workers/logs |
| Method | GET |

10.5.2. Response

{

"events": [

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Cannot find Worker",

"severity": "error",

"code": 2

},

{

"timestamp": "2022-02-07T12:29:41.604+00:00",

"message": "Cannot register Worker (not enough memory)",

"severity": "error",

"code": 1

}

],

"status": "ok"

}

An empty "events" array may be returned in the case when there’s nothing to be reported.

{

"events": [],

"status": "ok"

}

TECHNICAL NOTES

1. MT-TN-10 Report XML File Format Specification

1.1. Introduction

1.1.1. Overview

This document describes the Marquise Technologies Report XML file format, used for various reporting purposes across the Marquise Technologies product line. For example, a report is generated when validating various file formats, or simply to obtain detailed information about them.

The file format is based on the eXtensible Markup Language (XML) and uses its conventions and structures.

1.1.2. Scope

This specification is intended to give a reference to developers and implementers of this file format. As the format evolves to include new features, this specification is subject to frequent updates.

1.1.3. Document Organization

The specification is divided in various sections with a strong focus on implementation.

The last section of the document provides a detailed description of the various types used by the file format. These types are either simple types or complex types. Complex types contain a description of the sub-elements they contain.

The types are listed in alphabetic order.

1.2. AssetDescriptorType

1.2.1. Elements

| Element Name | Type | Description |
|---|---|---|
| AssetKind | | a tag uniquely identifying the type of the asset |
| SourceDescriptor | | a source descriptor that describes the location and storage properties of the asset |

1.2.2. AssetKindEnum

The AssetKindEnum is an enumeration of fixed UTF-8 values that indicate the kind of the asset. Typically the kind of the asset must be compatible with the type of the essence referenced in a composition playlist track, otherwise it’s considered as an error.

| Value | Description |
|---|---|
| unknown | any asset for which the kind cannot be determined has this value |
| picture | the asset contains a single video track. |
| picture.s3d | the asset contains a single stereoscopic video track. |
| sound | the asset contains a single audio track. |
| timedtext | the asset contains a single timed text track. |
| immersiveaudio | the asset contains a single immersive audio track. |
| data | the asset contains a single data track. |
| combined | the asset contains multiple tracks with various essences. |
| pkl | the asset is a packing list. |
| cpl | the asset is a composition playlist. |
| opl | the asset is an output profile list. |
| scm | the asset is a sidecar composition map. |
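When reading a report in client code, the fixed set of values maps naturally onto an enumeration. The sketch below is one way to model it in Python. The class and function names are hypothetical, and mapping unrecognized strings to `unknown` is a design choice of this sketch, not a rule stated by the specification.

```python
from enum import Enum

class AssetKind(Enum):
    """Values of AssetKindEnum as listed in the table above."""
    UNKNOWN = "unknown"
    PICTURE = "picture"
    PICTURE_S3D = "picture.s3d"
    SOUND = "sound"
    TIMEDTEXT = "timedtext"
    IMMERSIVEAUDIO = "immersiveaudio"
    DATA = "data"
    COMBINED = "combined"
    PKL = "pkl"
    CPL = "cpl"
    OPL = "opl"
    SCM = "scm"

def parse_asset_kind(text):
    """Convert report text to an AssetKind, falling back to UNKNOWN."""
    try:
        return AssetKind(text.strip())
    except ValueError:
        return AssetKind.UNKNOWN

print(parse_asset_kind("picture.s3d"))
```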

1.4. AssetDescriptorListType

The AssetDescriptorList element is defined by the AssetDescriptorListType and contains an ordered list of AssetDescriptor elements. Each AssetDescriptor element is defined by the AssetDescriptorType.

Below is an example of an AssetDescriptorList:

...

<AssetDescriptorList>

<AssetDescriptor>

...

</AssetDescriptor>

...

<AssetDescriptor>

...

</AssetDescriptor>

</AssetDescriptorList>

...

1.5. AudioDescriptorType

1.5.1. Elements

| Element Name | Type | Description |
|---|---|---|
| ChannelCount | UInt32 | a value representing the number of audio channels in the essence referenced by the parent track. |
| SoundfieldConfiguration | UTF-8 string | a tag representing the configuration formed by the audio channels in the track. The tag is based on the SMPTE ST377-4 Multichannel Audio framework MCATagSymbol, using the "sg" prefix. |
| Annotation | UTF-8 string | An optional user-defined annotation text string |
| Title | UTF-8 string | An optional text string as specified in SMPTE ST377-41 |
| TitleVersion | UTF-8 string | An optional text string as specified in SMPTE ST377-41 |
| TitleSubVersion | UTF-8 string | An optional text string as specified in SMPTE ST377-41 |
| Episode | UTF-8 string | An optional text string as specified in SMPTE ST377-41 |
| ContentKind | UTF-8 string | A tag representing the content kind as specified in SMPTE ST377-41 |
| ElementKind | UTF-8 string | A tag representing the element kind as specified in SMPTE ST377-41 |
| PartitionKind | UTF-8 string | A tag representing the partition kind as specified in SMPTE ST377-41 |
| PartitionNumber | UTF-8 string | A text string representing the partition number as specified in SMPTE ST377-41 |
| ContentType | UTF-8 string | A tag representing the content type as specified in SMPTE ST377-41 |
| ContentSubType | UTF-8 string | A tag representing the content subtype as specified in SMPTE ST377-41 |
| ContentDifferentiator | UTF-8 string | An optional user-defined text string representing the content differentiator as specified in SMPTE ST377-41 |
| UseClass | UTF-8 string | A tag representing the use class as specified in SMPTE ST377-41 |
| SpokenLanguageAttribute | UTF-8 string | A tag representing the attribute of the main spoken language as specified in SMPTE ST377-41 |
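
As an illustration of how a client might read the first two elements of this table, here is a hedged Python sketch. The enclosing `AudioDescriptor` element name, the absence of an XML namespace, the sample values, and the function `read_audio_descriptor` are all assumptions and are not taken from the STORM API documentation.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment: element nesting, missing namespace and values are
# assumptions made for illustration only.
SAMPLE = """
<AudioDescriptor>
  <ChannelCount>6</ChannelCount>
  <SoundfieldConfiguration>sg51</SoundfieldConfiguration>
  <Annotation>Main mix</Annotation>
</AudioDescriptor>
"""

UINT32_MAX = 0xFFFFFFFF


def read_audio_descriptor(xml_text):
    """Return (channel_count, soundfield) after two basic sanity checks."""
    root = ET.fromstring(xml_text.strip())
    channel_count = int(root.findtext("ChannelCount", default="0"))
    soundfield = root.findtext("SoundfieldConfiguration", default="")
    # ChannelCount is documented as a UInt32.
    if not 0 <= channel_count <= UINT32_MAX:
        raise ValueError("ChannelCount does not fit in a UInt32")
    # The table states that the soundfield tag uses the "sg" prefix.
    if not soundfield.startswith("sg"):
        raise ValueError("SoundfieldConfiguration should use the 'sg' prefix")
    return channel_count, soundfield


channel_count, soundfield = read_audio_descriptor(SAMPLE)
```

The sketch does not check that the channel count matches the soundfield configuration, since that relationship is defined by the SMPTE documents rather than by this table.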

1.7. CompositionDescriptorType

1.7.1. Elements

| Element Name | Type | Description |
|---|---|---|
| Name | UTF-8 string | a human readable text string containing the name of the composition as stored in the subject |
| Annotation | UTF-8 string | A human readable text containing comments associated with the composition |
| Issuer | UTF-8 string | A human readable text that identifies the entity that produced the composition |
| IssueDate | xs:dateTime | creation date and time of the composition |
| Creator | UTF-8 string | a human readable text string containing the name of the tool used to create the composition |
| Language | | main composition language |
| Duration | UInt64 | A 64-bit unsigned integer containing the number of edit units in the composition |
| EditRate | Rational | A rational containing the edit rate of the composition (e.g. frame rate) |
| TimecodeDescriptor | | A timecode descriptor defining the start timecode of the composition |
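
Because Duration is expressed in edit units and EditRate in edit units per second, the running time follows by division. A minimal Python sketch, assuming the two values have already been read into an integer and a `Fraction` (the function name `composition_seconds` is hypothetical, and how a Rational is serialized is not covered here):

```python
from fractions import Fraction


def composition_seconds(duration: int, edit_rate: Fraction) -> Fraction:
    """Running time in seconds: edit units divided by edit units per second."""
    if duration < 0:
        raise ValueError("Duration is an unsigned value")
    if edit_rate <= 0:
        raise ValueError("EditRate must be positive")
    return Fraction(duration) / edit_rate


# 2880 edit units at 24 frames per second.
running_time = composition_seconds(2880, Fraction(24, 1))

# A fractional rate such as 24000/1001 stays exact with Fraction.
ntsc_time = composition_seconds(24000, Fraction(24000, 1001))
```

Keeping the result as a `Fraction` avoids rounding errors with fractional rates; it can be converted with `float()` only when a value is displayed.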

1.8. ComplianceTestPlanListType

The ComplianceTestPlanList element is defined by the ComplianceTestPlanListType and contains an ordered list of ComplianceTestPlan elements. Each ComplianceTestPlan element is defined by the ComplianceTestPlanType.

Below is an example of a ComplianceTestPlanList:

    ...
    <ComplianceTestPlanList>
      <ComplianceTestPlan>
        ...
      </ComplianceTestPlan>
      ...
      <ComplianceTestPlan>
        ...
      </ComplianceTestPlan>
    </ComplianceTestPlanList>
    ...

1.9. ComplianceTestPlanType

The ComplianceTestPlan is defined by the ComplianceTestPlanType and contains an ordered list of TestSequence elements. Each TestSequence element is defined by the TestSequenceType.

Below is an example of a ComplianceTestPlan:

    ...
    <ComplianceTestPlan type=<type> version=<version>>
      <TestSequence type=<type>>
        ...
      </TestSequence>
      ...
      <TestSequence type=<type>>
        ...
      </TestSequence>
    </ComplianceTestPlan>
    ...

1.12. DataRateDescriptorType

1.12.1. Elements

| Element Name | Type | Description |
|---|---|---|
| AverageBytesPerSecond | UInt32 | A 32-bit non-null unsigned integer containing the average bytes per second |
| AverageSampleSize | UInt32 | A 32-bit non-null unsigned integer containing the average sample size in bytes |
| MaximumBytesPerSecond | UInt32 | A 32-bit non-null unsigned integer containing the maximum bytes per second |
| MaximumSampleSize | UInt32 | A 32-bit non-null unsigned integer containing the maximum sample size in bytes |
| MinimumSampleSize | UInt32 | A 32-bit non-null unsigned integer containing the minimum sample size in bytes |

1.13. EncodingDescriptorType

1.13.1. Elements

| Element Name | Type | Description |
|---|---|---|
| Codec | UTF-8 string | a tag uniquely identifying the codec used by the parent essence descriptor |
| Profile | UTF-8 string | a tag uniquely identifying the codec profile used by the parent essence descriptor |
| Level | UTF-8 string | a tag uniquely identifying the codec profile level used by the parent essence descriptor |
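
The three elements can be collected into a small record when parsing. The sketch below is illustrative only: the `Encoding` dataclass, the function `read_encoding`, the absence of an XML namespace, and the placeholder tag values are assumptions and do not reflect actual codec tags used by TORNADO.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Optional


@dataclass
class Encoding:
    """Codec tags read from an EncodingDescriptor element."""
    codec: Optional[str]
    profile: Optional[str]
    level: Optional[str]


def read_encoding(element: ET.Element) -> Encoding:
    """Read Codec, Profile and Level; missing elements are returned as None."""
    return Encoding(
        codec=element.findtext("Codec"),
        profile=element.findtext("Profile"),
        level=element.findtext("Level"),
    )


# Placeholder values: real codec, profile and level tags are not listed here.
SAMPLE = """
<EncodingDescriptor>
  <Codec>example-codec</Codec>
  <Profile>example-profile</Profile>
</EncodingDescriptor>
"""

encoding = read_encoding(ET.fromstring(SAMPLE.strip()))
```

Returning `None` for an absent element keeps the reader tolerant; whether Profile and Level are optional in practice is not stated in the table.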

1.14. EssenceDescriptorType

1.14.1. Elements

| Element Name | Type | Description |
|---|---|---|
| Duration | UInt64 | A 64-bit unsigned integer containing the number of edit units in the underlying essence. |
| SampleRate | Rational | A rational containing the sample rate of the underlying essence. |
| Language | | main essence language |
| EncodingDescriptor | | A complex type describing the codec parameters used to encode the essence |
| DataRateDescriptor | | An optional complex type describing the essence data rate |

1.14.2. Attributes

| Attribute Name | Type | Description |
|---|---|---|
| type | UTF-8 string | a tag uniquely identifying the type of essence described |

1.14.3. About SampleRate

For video essence descriptors, the sample rate is equal to the edit rate of the track that the essence descriptor belongs to. However, if the essence is stereoscopic, the sample rate is double the track’s edit rate. For instance, a stereoscopic video track with an edit rate of 24 frames per second has its essence sample rate set to 48, indicating that 2 samples (i.e. 2 frames) are played for every edit unit in the track.

For audio essence descriptors, the sample rate represents the audio sample rate (typically 48 kHz).

Unless specified otherwise, other tracks have their sample rate specified in video frame units.
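
The rules above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than TORNADO's implementation: the function names `video_sample_rate` and `samples_per_edit_unit` are hypothetical, and rates are assumed to be available as `Fraction` values.

```python
from fractions import Fraction


def video_sample_rate(edit_rate: Fraction, stereoscopic: bool) -> Fraction:
    """Sample rate of a video essence: the track edit rate, doubled for stereoscopic essence."""
    return edit_rate * 2 if stereoscopic else edit_rate


def samples_per_edit_unit(sample_rate: Fraction, edit_rate: Fraction) -> Fraction:
    """Number of essence samples played for each edit unit of the track."""
    if edit_rate <= 0:
        raise ValueError("edit rate must be positive")
    return sample_rate / edit_rate


edit_rate = Fraction(24, 1)

# Stereoscopic video at 24 frames per second: two frames per edit unit.
s3d_rate = video_sample_rate(edit_rate, stereoscopic=True)
s3d_samples = samples_per_edit_unit(s3d_rate, edit_rate)

# 48 kHz audio on a 24 frames per second track.
audio_samples = samples_per_edit_unit(Fraction(48000, 1), edit_rate)
```

With these inputs, `s3d_rate` is 48 and `s3d_samples` is 2, matching the stereoscopic example in the text, while each edit unit of the audio track spans 2000 audio samples.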

1.15. ImmersiveAudioDescriptorType

1.15.1. Elements

| Element Name | Type | Description |
|---|---|---|
| SoundfieldConfiguration | UTF-8 string | a tag representing the immersive audio configuration in the track. The tag is based on the SMPTE ST377-4 Multichannel Audio framework MCATagSymbol (typically IAB). |
| Annotation | UTF-8 string | An optional user-defined annotation text string |
| Title | UTF-8 string | An optional text string as specified in SMPTE ST377-41 |

TitleVersion |

UTF-8 string |